Creating a Chat Application to Communicate with ChatGPT, Powered by Altair SLC: Problem Setup

With OpenAI releasing the ChatGPT “gpt-3.5-turbo” model in early March, developers can now build new applications on top of the ChatGPT Large Language Model (LLM). What’s more, the model is relatively simple to connect to via API: once users sign up for an OpenAI account, they have access to the “chat completion” endpoint, which lets them hold conversations with the ChatGPT model. The endpoint can be reached in several ways, but the easiest is OpenAI’s Python library, openai. With this library, users authenticate their Python session, pass the text of the chat to ChatGPT, and receive a structured response.
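For reference, here is a minimal sketch of the request and response bodies the chat completion endpoint exchanges, written as plain Python dictionaries with no network call. The helper names build_chat_request and extract_reply are hypothetical, and sample_response is a hand-written, abridged example in the endpoint's documented shape, not real model output.

```python
import json


def build_chat_request(user_text: str, model: str = "gpt-3.5-turbo") -> dict:
    """Build the JSON body for a single-turn chat completion request."""
    return {
        "model": model,
        # Each message pairs a role ("system", "user" or "assistant") with its text.
        "messages": [{"role": "user", "content": user_text}],
    }


def extract_reply(response: dict) -> str:
    """Return the assistant's text from a chat completion response body."""
    return response["choices"][0]["message"]["content"]


# Hand-written, abridged response in the endpoint's shape (real responses
# also carry fields such as id, created and usage).
sample_response = {
    "choices": [
        {
            "index": 0,
            "message": {"role": "assistant", "content": "Hello! How can I help?"},
            "finish_reason": "stop",
        }
    ]
}

print(json.dumps(build_chat_request("Hi there")))
print(extract_reply(sample_response))  # Hello! How can I help?
```

The "structured response" is this nested JSON; the reply text sits under the first choice's message.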

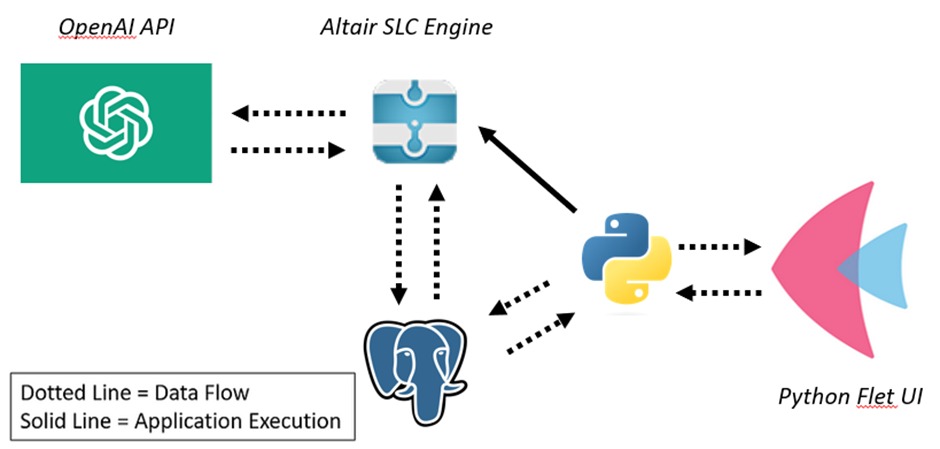

To celebrate this release and showcase one example of an Altair technology interfacing with this LLM, I decided to build a simple chat application that takes a user’s text input, passes it to the “gpt-3.5-turbo” model, parses the response, and posts it to the chat. The user interface is built with Python Flet, the chat messages are stored in a simple PostgreSQL database, and the connection to the OpenAI API is handled by Altair SLC, Altair’s alternative SAS language environment. To build the SAS language program itself, I used Altair Analytics Workbench in workflow mode. Breaking down the data and execution flow, it looks something like this…

The Flet UI is built in Python, which lets me call Altair SLC from the command line. The user types some text and clicks submit, which tells Python to write the text to the PostgreSQL database and run the Altair SLC engine; Altair SLC then connects to the OpenAI API, gets a response, and writes it back to the database.

What’s nice about this setup is that the Altair SLC component is independent of the Python Flet UI and can be connected to any front end. If I wanted to connect Altair SLC to a custom website or another UI, the components would already be built, and I would only need to point them at wherever the user input comes from.

If you’re interested, you can see the full list of DA products here, which includes RPA, data automation, and machine learning tools, all accessible with Altair Units, our licensing model that allows the use of any of the products without purchasing a separate license for each.

Part 1: Chat Application Setup, OpenAI Connection

Python Flet Chat Application + Chat Schema

For this project I kept the chat application pretty bare-bones: I wanted a simple text box for the user to type a question, a send button, and a chat history of the questions and responses. To do this, I took the first three steps of the Flet guide on building a chat application…

…and dissected their functions for my own project. The Python Flet application handles the following:

⦁ Submitting the user’s text: this comes straight from the Flet guide.

⦁ Writing and reading messages from both the user and ChatGPT: the user’s text is written to PostgreSQL when they click “Send”, and Altair SLC writes ChatGPT’s reply once it receives a response from the model.

⦁ Executing Altair SLC to submit data to ChatGPT: when the user submits their text, the Python Flet application calls Altair SLC using the below command line configuration, and Altair SLC runs the .sas script version of the workflow.

WPS stands for World Programming System; Altair acquired World Programming, the company behind it, in December 2021. Users will need both Altair Analytics Workbench and the Altair SLC engine installed.
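A minimal sketch of how the Flet application might launch the engine with Python’s standard subprocess module. The exact Altair SLC command line is not reproduced here, so the command is passed in as a parameter and the demo uses a stand-in Python command; the function name run_engine and the 300-second timeout are assumptions.

```python
import subprocess
import sys
from typing import Sequence


def run_engine(command: Sequence[str], timeout: float = 300.0) -> str:
    """Run the engine as a child process and return whatever it printed.

    check=True raises subprocess.CalledProcessError on a non-zero exit code,
    so a failed run surfaces as an error instead of a chat that never updates.
    """
    result = subprocess.run(
        list(command), capture_output=True, text=True, check=True, timeout=timeout
    )
    return result.stdout


if __name__ == "__main__":
    # Stand-in command so the sketch runs anywhere. Replace it with your
    # Altair SLC executable and the exported .sas script path.
    print(run_engine([sys.executable, "-c", "print('engine finished')"]))
```

Passing the command as a list rather than a single shell string avoids quoting problems with paths that contain spaces.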

The data flow is fairly simple: PostgreSQL stores the questions from the user and the responses from ChatGPT. I used a remote PostgreSQL instance because I also plan to upload this application to Altair SmartWorks Hub, the server component of Altair SLC; for this article, however, everything stays on the desktop.

The schema for the PostgreSQL table is also simple, with the table keeping track of the following:

⦁ Author of the message, either “user” or “chatgpt”

⦁ The message itself

⦁ Whose turn it is, either “user” or “chatgpt”

⦁ A boolean flag if the conversation is still active or not
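The schema above can be sketched as a CREATE TABLE statement. This sketch uses Python’s built-in sqlite3 module as a stand-in for PostgreSQL so it runs without a database server. The table name chat_messages, the id column used to order messages, and setting turn to “chatgpt” after a user message are all assumptions; with a PostgreSQL driver such as psycopg2, the ? placeholders would become %s.

```python
import sqlite3

# Hypothetical table name. Columns follow the schema above, plus an assumed
# auto-incrementing id that orders the conversation.
CREATE_CHAT_TABLE = """
CREATE TABLE IF NOT EXISTS chat_messages (
    id      INTEGER PRIMARY KEY AUTOINCREMENT,  -- SERIAL PRIMARY KEY in PostgreSQL
    author  TEXT NOT NULL CHECK (author IN ('user', 'chatgpt')),
    message TEXT NOT NULL,
    turn    TEXT NOT NULL CHECK (turn IN ('user', 'chatgpt')),
    active  BOOLEAN NOT NULL DEFAULT 1          -- DEFAULT TRUE in PostgreSQL
)
"""


def add_user_message(conn: sqlite3.Connection, text: str) -> None:
    """Store the text the user sent; assumed: it is now ChatGPT's turn."""
    conn.execute(
        "INSERT INTO chat_messages (author, message, turn) VALUES (?, ?, ?)",
        ("user", text, "chatgpt"),
    )
    conn.commit()


conn = sqlite3.connect(":memory:")
conn.execute(CREATE_CHAT_TABLE)
add_user_message(conn, "What is Altair SLC?")
print(conn.execute("SELECT author, message, turn, active FROM chat_messages").fetchall())
# [('user', 'What is Altair SLC?', 'chatgpt', 1)]
```

The CHECK constraints keep author and turn limited to the two values the application uses.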

OpenAI Setup

To get access to the ChatGPT LLM, I set up an account at https://platform.openai.com/ and signed up for a paid account. Once the account was created and a secret API key generated, I could communicate with the chat completions endpoint using code similar to the below…
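A minimal sketch of such a call, assuming the openai Python package version 1.x (the 0.x releases current in March 2023 used openai.ChatCompletion.create instead). The function name ask_chatgpt is hypothetical, and the optional client parameter is an addition that lets a fake client stand in during testing.

```python
from typing import Any, Optional


def ask_chatgpt(user_text: str, client: Optional[Any] = None,
                model: str = "gpt-3.5-turbo") -> str:
    """Send one user message to the chat completions endpoint and return the reply text."""
    if client is None:
        # Imported here so this module still loads where openai isn't installed.
        from openai import OpenAI

        # With no api_key argument, the client reads the OPENAI_API_KEY environment variable.
        client = OpenAI()
    completion = client.chat.completions.create(
        model=model,
        messages=[{"role": "user", "content": user_text}],
    )
    # The reply text lives on the first choice's message.
    return completion.choices[0].message.content
```

With OPENAI_API_KEY set, `print(ask_chatgpt("What is the SAS language?"))` prints the model’s reply. Reading the key from the environment keeps the secret out of the script itself.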

Part 2: Altair SLC + OpenAI Connection

Altair SAS Language IDE vs the Workflow Editor

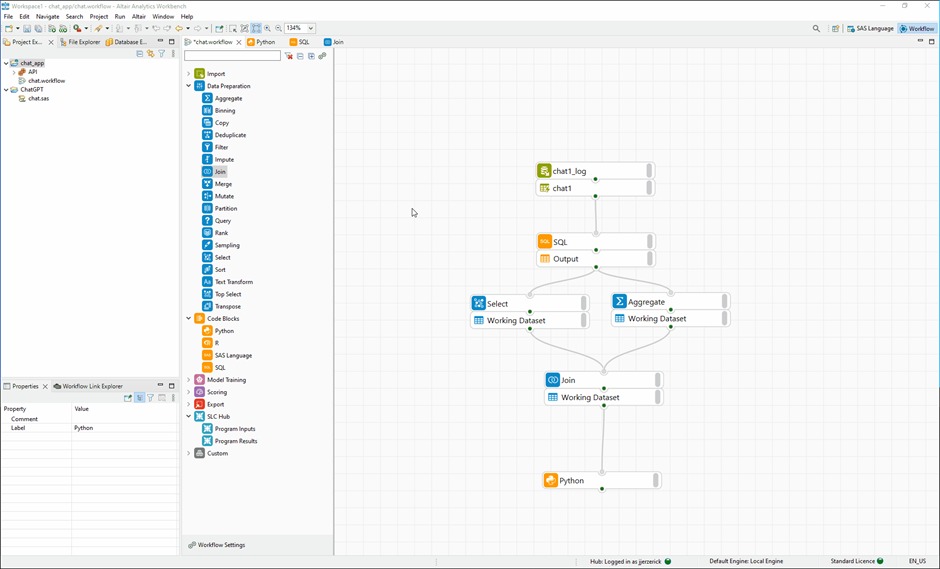

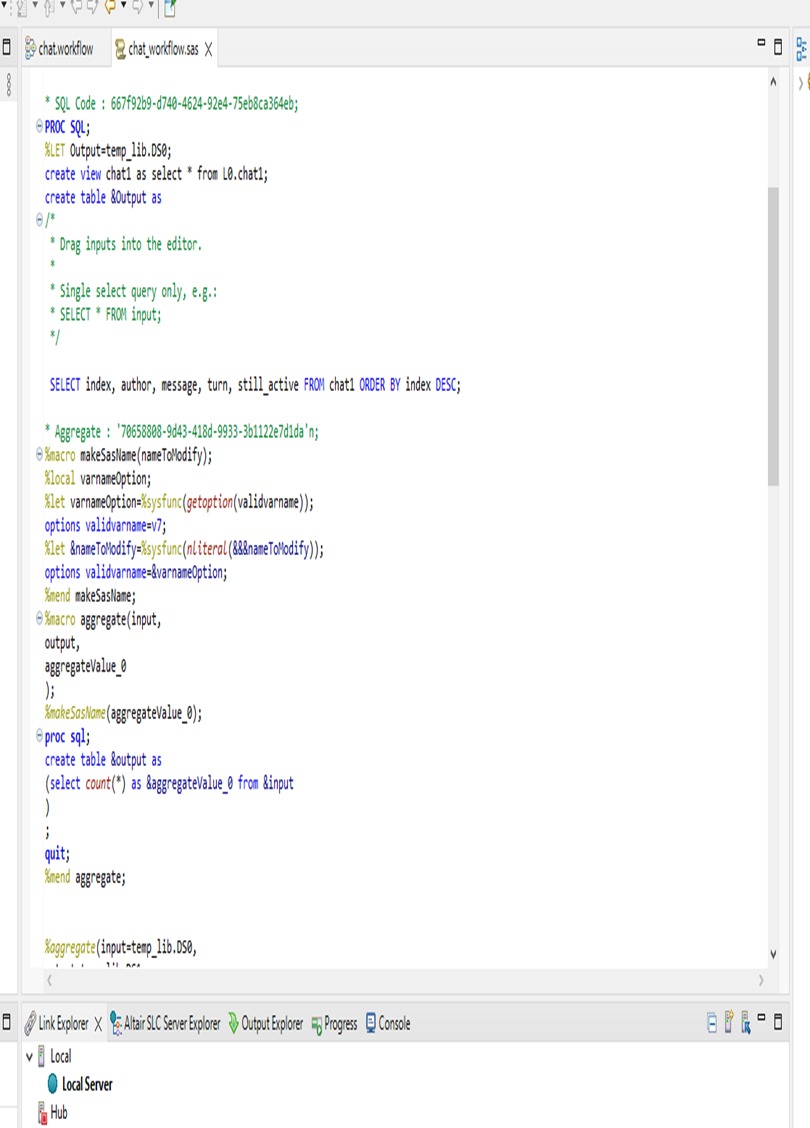

Altair Analytics Workbench is Altair’s proprietary software for building and executing SAS language scripts without needing to license third-party products. It offers two ways of building SAS language applications: an IDE-like environment where users write SAS language code, and a workflow environment where users drag and drop blocks onto the interface. Workflows can be converted into .sas scripts fairly easily, as seen in the below gif; this conversion is also required to execute them from the command line. I decided to go with the workflow configuration and insert a “Python code” block to connect to the chat completion endpoint.

ChatSLC Workflow Overview

Altair Analytics Workbench has a very intuitive interface for building workflows in a no-code/low-code environment. To connect the Flet application to ChatGPT, the workflow needs to do the following:

⦁ Pull in the user messages from the PostgreSQL database

⦁ Identify the latest message by its index in the conversation

⦁ Pass the text of that message to ChatGPT using the Python library openai, then write the response back to PostgreSQL

The first step was easy: pulling data from the PostgreSQL database required only the hostname, port number, and associated credentials. Once the connection was established, I could submit a SQL query using the “SQL” block and get the data I needed…
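The query behind the “SQL” block might look like the following sketch, shown as a Python function against sqlite3 as a stand-in for PostgreSQL. It assumes a hypothetical chat_messages table with the author, message, turn, and active columns described earlier plus an assumed auto-incrementing id, which plays the role of the conversation index.

```python
import sqlite3
from typing import Optional

# Newest user message in the active conversation. Ordering by the assumed id
# column stands in for "the index of the conversation"; the bare `active`
# condition works for a PostgreSQL BOOLEAN and a SQLite 0/1 column alike.
LATEST_USER_MESSAGE_SQL = """
SELECT message
FROM chat_messages
WHERE author = 'user' AND active
ORDER BY id DESC
LIMIT 1
"""


def latest_user_message(conn: sqlite3.Connection) -> Optional[str]:
    """Return the most recent active user message, or None if there is none."""
    row = conn.execute(LATEST_USER_MESSAGE_SQL).fetchone()
    return row[0] if row else None
```

Letting the database sort and limit means only one row crosses the connection, however long the chat history grows.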

Once I had the latest message, I passed it as an input to a “Python” block containing the OpenAI code from above, along with code that parsed the response and wrote it to the PostgreSQL database. Below is the resulting workflow. I then exported the workflow as a .sas script and added the command line execution to the Python Flet application so that it runs whenever a user submits a message!
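The remaining work inside the Python block (send the message, parse the reply, write it back) can be sketched as below. This again uses sqlite3 as a stand-in for PostgreSQL and a hypothetical chat_messages table; the function name answer_latest is illustrative, handing the turn back to the user is an assumed use of the turn column, and how Altair’s Python block passes its input data to this code is not shown.

```python
import sqlite3
from typing import Callable


def answer_latest(
    conn: sqlite3.Connection, user_text: str, ask: Callable[[str], str]
) -> str:
    """Get a reply for user_text, store it, and hand the turn back to the user.

    `ask` is any function that sends text to the model and returns the parsed
    reply text, for example one wrapping the chat completions call.
    """
    reply = ask(user_text).strip()
    conn.execute(
        "INSERT INTO chat_messages (author, message, turn) VALUES (?, ?, ?)",
        ("chatgpt", reply, "user"),
    )
    conn.commit()
    return reply
```

Taking the model call as a parameter keeps the database logic testable without an API key or network access.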

Part 3: Testing It All Out

After testing each piece individually, the last step was to test the full application. Needless to say, I was quite happy with the results; see the below gif!

A simple chat application with Altair SLC + OpenAI running in the background

Final Thoughts

While this project was fun, using Altair Analytics Workbench and Altair SLC in this way doesn’t leverage all they have to offer. This line of Altair products is one of the few on the market that allow execution of the SAS language without licensing third-party products. It is a natural fit for organizations that want to move to more modern data analytics languages (e.g., Python, R) without converting all their SAS language work at once. Anyone who has worked in the SAS language will find Analytics Workbench familiar, whether for coding, data analysis, or workflow building.